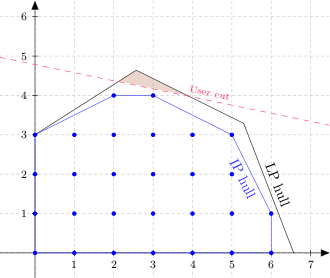

Let me start with an illustration of the feasible region of a hypothetical two variable pure integer linear program.

The polygon bounded in black (including portions of the two axes) is the feasible region of the linear program relaxation (also known as the linear hull or LP hull). This is what you get if you relax the integrality restrictions while enforcing all functional constraints and variable bounds. The blue dots are the integer lattice points inside the polygon, i.e., the feasible solutions that meet the integrality restrictions. The blue polygon, the integer hull or IP hull, is the smallest convex set containing all integer-feasible solutions. The IP hull is always a subset of the LP hull. Assuming a linear objective function, one of the vertices of the IP hull (all of which are lattice points) will be the optimal solution to the problem.

User Cuts

In CPLEX parlance, a cut is a constraint that is not part of the original model and does not eliminate any feasible integer solutions. Cuts are typically added for one of two reasons: to separate a node in the search tree into two nodes representing smaller subproblems; or to strengthen bounds by paring away some of the fat in the LP hull (the "fat" being the set difference between the LP hull and the IP hull). CPLEX automatically adds a variety of cuts, of which the most famous is arguably the Gomory cut. A user cut is simply a cut added by the user, rather than by CPLEX, frequently but not necessarily inside a callback. The next figure illustrates a user cut (red dashed line).

The shaded triangle shows the portion of the original LP hull that is removed by the user cut. Note that no integer solutions were harmed in the construction of this diagram.

Lazy Constraints

In contrast to user cuts, lazy constraints are allowed to pare away integer-feasible solutions. The next figure shows an example of a lazy constraint (green line).

The shaded polygon is again the portion of the LP (and, in part, IP) hull removed by the constraint. Note that, this time, three integer points are being rendered infeasible. The implication of using lazy constraints is that the problem originally specified (first figure) is actually a relaxation of the true problem; adding the lazy constraints to that initial problem gives you the problem you really want to solve.

Why use lazy constraints? Three reasons come to mind. First, a full specification of the model may involve an extremely large number of constraints, many of which you anticipate will be redundant, or at least not binding near the optimal solution. If you knew in advance which of those constraints were redundant, you could simply omit them, but frequently you cannot identify redundant constraints at the outset. Many modern solvers allow you to put a portion of the constraints (what we are calling the "lazy" ones) in a pool, where they are initially not a part of the active model. As solutions are generated, the solver checks to see if any lazy constraints are violated and, if so, adds them to the active set. Lazy constraints that were previously added but have not been binding for a while may be returned to the pool. This is an attempt to reduce the computation time per iteration, and possibly the memory footprint of the problem.

A second and closely related reason to use lazy constraints is that there may in fact be so many constraints that you cannot afford the time or memory to generate them all at the outset. One approach to formulating the Traveling Salesman Problem as an integer program requires the use of subtour elimination constraints. The number of such constraints grows exponentially with the number of nodes in the network. While they are easy to specify in a computer program, for decent size networks you simply cannot afford to list them all. An alternative is to treat them as lazy constraints and generate them on the fly, through a callback.

The third reason to use lazy constraints, specifically in a callback, is that you may not be able to identify them at the time the model is specified. The feasibility and optimality cuts generated during Benders decomposition fall into this category. You discover them by solving one or more subproblems at certain points in the search for the solution to the master problem.

Why Two Categories?

We've observed that the distinction between user cuts and lazy constraints is fundamentally whether or not they can cut off otherwise feasible solutions. The reason we care about that is somewhat arcane. CPLEX and other solvers do a variety of problem reductions, both at the outset (presolving) and on the fly during the search process, to tighten the feasible regions of node problems and expedite the solution process. Some of those reductions involve solutions to dual problems. User cuts do not affect the reductions, but if you hide some actual constraints in a pool of lazy constraints (or generate them after the reductions have been done), the dual solutions used in the reductions are incomplete (lacking dual variables corresponding to the constraints that are not yet included). This can lead to incorrect results.

If CPLEX sees the user adding lazy constraints (or, by virtue of the presence of a lazy constraint callback, threatening to add lazy constraints), it automatically turns of reductions that might be incorrect. I recently ran a program that uses CPLEX (12.4) with a lazy constraint callback. The output contained the following message:

Lazy constraint(s) or lazy constraint callback is present.

Disabling dual reductions (CPX_PARAM_REDUCE) in presolve.

Disabling non-linear reductions (CPX_PARAM_PRELINEAR) in presolve.

Disabling dual reductions (CPX_PARAM_REDUCE) in presolve.

Disabling non-linear reductions (CPX_PARAM_PRELINEAR) in presolve.

As user, you can turn off these reductions manually, but including a lazy constraint callback (or adding constraints to the lazy constraint pool during model construction) will induce CPLEX to do it for you.

So What Got Clarified?

A few versions ago, IBM refactored their implementation of cut callbacks. Of particular interest is the conditions under which cut callbacks are called.

- User cuts may be checked and applied at any time during the optimization process. This includes the cut generation phase at each node. They are not guaranteed to be checked at the time a candidate solution (potential new incumbent) is found, however. Calling a constraint a user cut tells CPLEX that it cannot cut off a feasible solution, so there is no need to test it each time a feasible solution is found.

- Lazy constraints, on the other hand, are guaranteed to be checked each time CPLEX finds a candidate solution, regardless of how the candidate is found (node LP solution, rounding, various heuristics, inferred from the position of the planets ...). This is important, because a lazy constraint might make the candidate solution infeasible in the full problem. (There is one small exception, at least as of version 12.4 and earlier: a candidate solution injected by the user through a heuristic callback is not checked against the lazy constraints. The user is somewhat charitably assumed to know what she or he is doing, so a user-injected solution is assumed to satisfy all lazy constraints.) What changed in the refactoring of the cut callback interface is that lazy constraints are checked only when a candidate solution is found. They are not routinely checked at every node.

So here, at last, is the clarification. It turns out that it is legal to add a lazy constraint in a user cut callback (gasp!) as long as the user includes a lazy constraint callback or otherwise ensures that the necessary reductions are turned off. Since user cut callbacks are not called when candidate incumbents are found, at least for Benders decomposition this would suggest adding the same cut generation logic in both a lazy constraint callback (mandatory) and a user cut callback (optional).

I'm told that the next (?) release of official documentation for CPLEX will include this clarification. To close, I'll note that if you intend to sneak lazy constraints into a user cut callback, the safest way to do this may be to include a lazy constraint callback, even if it does nothing (just returns with no action). That way, CPLEX will automatically turn off everything that needs to be turned off. As I said, you can use parameter settings to turn off reductions manually, but which reductions will need to be turned off could potentially change in a new version. I, for one, am too lazy (pun deliberate) to keep up with that stuff.

Hi there, I am doing some experiment with lazy constraints and find your post just in time! Actually I am quite interested in what CPLEX does when it finds a potential incumbent solution violated by some lazy constraints, since it is not clarified in the manual, do you have any idea about this?

ReplyDeleteThanks,

Y.Li

Lazy constraints (and user cuts) function like regular model constraints, other than being held in a pool. When a possible incumbent is found, the pool of lazy constraints is checked, and if the candidate violates any of them it is rejected. I'm pretty sure that the lazy constraint it violated then becomes a member of the active constraint set (the ones used to generate a basis) for a while.

DeleteIf the candidate satisfies all existing lazy constraints, and if there is a lazy constraint callback, CPLEX calls the LC callback. Should you add a lazy constraint in the callback that cuts off the candidate, CPLEX rejects the candidate and (as I understand it) adds the new lazy constraint (either locally or globally, depending on how you added it), makes it active, and starts over at the node (solving the node LP and, in the process, repeating the cut cycle -- so if you also have a user cut callback, I think it will be called again at the node).

That's what I think happens. I'm not actually privy to the (proprietary) CPLEX code. From time to time someone at IBM explains this to me, and then I promptly forget all the gory details. :-)

Dear Prof. Rubin,

DeleteI guess that you probably forget this gory detail. But I'll give it a shot and ask it anyway.

What if I would add a (lazy-)constraint in the [lazy constraint] callback which does not "cut off the candidate"... Then does CPLEX reject the incumbent candidate (based on the fact that a (lazy cut) constraint is just added; which is based on this last incumbent candidate)? Or it [CPLEX] checks one last time whether this constraint that is added just now is violated [by the last incumbent candidate] or not?

Thank for this wonderful bloq!

Halil Sen

In my "little" experiment, I conclude that even if the cut created (and about to added to the model) in the lazyconstraintcallback is not violated by the [incumbent] candidate (per se ), it (still) is added to model and causes unnecessary iterations (and cuts).

DeleteHowever (unfortunately) I'm still not convinced. So, I would appreciate if you have any know-how about this situation.

h.

Halil,

DeleteI'm currently at a conference, so it will be a week or so before I can investigate this more thoroughly. I'm fairly confident that adding a cut which the proposed incumbent satisfies does not lead to rejection of the incumbent. My understanding (possibly flawed) is that when the callback offers up a cut, CPLEX adds that cut to the active model and also consults a table of lazy constraints to see if any others are violated (activating any that are). I believe it then reoptimizes the node and, if an integer feasible solution is found, calls the callback again. I don't know whether CPLEX tests the new cut for violation before activating it or just assumes it should be active. Certainly the developers do not expect users to knowingly add cuts that fail to cut off the proposed incumbent.

Also, and again I'm not positive about this, I believe that each time the callback adds a cut, it is called again (at the same node), until it finally returns without adding a cut. IBM refactored the callback interface a few minor versions ago. Prior to the refactoring, one used the now deprecated CutCallback class for both user cuts and lazy constraints, and the pattern was as I described -- keep calling it until a call produces no changes. I'm not positive that behavior remains, but I think so.

Thank you for the prompt and detailed explanation. I would like to hear more if you find anything after the conference.

DeleteMy understanding is that Cplex "just assumes" the cut should be active.

I have a toy problem which minimizes an objective function without any constraints [at first] and adds a lazy cuts in each callback in the following form

obj >= 1/1; obj >= 1/4; obj >= 1/9; obj >= 1/16, so on... one-by-one.

It is clear that second and remaining cuts are unnecessary and loose however they make CPLEX go on and on until the right-hand-side value becomes infinitesimally small (total of ~4400 lazycallbacks).

On the other case if I add same cut in each callback (i.e., obj >= 1 on every callback ) it stops after the second cut.

For the pattern you described, I'm fairly positive that the "usercutcallback" behaves as you said however I guess that is not the case with "lazyconstraintcallback"s (I'm not sure though).

Hope you'll have an inspiring conference.

When you add cuts, CPLEX checks for repetitions. That probably explains the second case. In the first case, CPLEX keeps seeing new cuts, which explains why it keeps calling the callback. Accepting the cuts is not the same as adding them to the active constraint set. CPLEX has a separate table of lazy constraints. So when you add a loose constraint, it's possible CPLEX just trusts you that it will be binding and adds it to the active set, but it's also possible that CPLEX first adds it to the lazy table, then checks if it is binding, sees it is not, and leaves it out of the active set (but calls the callback again in case you have more constraints to add).

DeleteI ran a small experiment, and based on that I believe the following is true of lazy constraint callbacks:

Delete1. If the callback adds a cut that CPLEX has not seen before, it will be called again at the same node (and again and again, until it offers up nothing new).

2. If the callback adds a cut that CPLEX has already seen, it will _not_ be called again at that node.

3. Adding nonbinding cuts did not reduce node throughput at all in my experiment. Unfortunately (a) all the cuts were added at the root node -- I think CPLEX had the optimal solution by the time it started branching -- and (b) the number of added cuts was pretty small. So I can't be certain that nonbinding cuts added in the callback are held out (in the lazy cut table, or whatever they call it), but I suspect that's the case.

Thank you [again] for your time and effort.

DeleteThanks for your input!

ReplyDeleteY.Li

Hi, the information you gave is pretty clear and helpful. I am using the Lazy constraints currently as well and have one problem:

ReplyDeleteNormally, in Benders Decomposition, an optimality cut or feasibility cut will be added based on optimal solution of RMP. But when the lazy constraint is used by callback, the cut will be added when a candidate node in search tree is found. Should we call this Benders and Branch and cut instead of Benders decomposition?

I've seen out referred to as the "one tree" version of Benders decomposition (because it uses a single search tree, in contrast to the traditional version's initiation of a new tree after each cut is added). I don't know who coined the phrase, but I find it a good description.

DeleteHi, your reasons about using lazy constraints seem interesting but actually I need a reference for these statements since I want to use them in my research work. Can you propose anything to me? Thanks.

ReplyDeleteIf you're looking for an authoritative source, I don't think I can help. For the second reason (you'll die of old age generating all the cuts up front), it's not too hard to show that the number of possible subtours in a TSP network is typically huge (and, if you start with a complete graph, *really* huge). I suspect that if you look at source material on TSPs, you'll find something about full enumeration of subtour elimination constraints being scary. (Don't know if that made it into Bill Cook's widely acclaimed book or not). For the third reason (you won't know the cuts in advance), you might want to look at Moreno-Centeno and Karp, "The Implicit Hitting Set Approach ...", OR 61 (2), 2013.

DeleteThanks, my main concern is the traveling sales man problem. Actually for using lazy constraints, it is required to solve the same problem several times. (each time with a new set of constraints) How we can prove that solving the same problem hundreds of time will take less time comparing to listing all the subtour elimination constraints and solving the problem at once? I know that this lazy concept is a novel and good idea but I need to prove its benefit in my research work. Thanks.

DeleteIn many real-world problems, you cannot list all the subtour elimination constraints -- you won't have enough memory. On the other hand, for very small problem instances, I would not be shocked to find that listing all the subtour constraints is faster than using a callback.

DeleteSo I very much doubt that you can prove either method is superior to the other in all cases. At best, you can talk about average performance, perhaps for networks of a particular size or with particular characteristics (complete graph, sparse graph, maximum degree K, ...). If you really need to show that one method outperforms the other, I would suggest running experiments (solve the same problem both ways and track timing).

This comment has been removed by the author.

ReplyDeleteHi, thanks for your information. I am a junior student and starting to use CPLEX for VRP. I am using Lazy constraints for subtours and tours exceeding capacity but I have a problem. Although, there are tours exceeding capacity in final solution, lazy constaints does not called. I could not find any reasons why this is happening. Have you ever faced such problem?

ReplyDeleteNo, I cannot recall ever having an incumbent accepted without first having the lazy constraint callback called (assuming, of course, that I am using a lazy constraint callback).

DeleteI suggest you add some print statements to your lazy constraint callback, showing the proposed incumbent (and its objective value) each time the callback is called. If the callback never prints the final solution, something is seriously wrong. On the other hand, if it does print the final solution, then your callback code contains a bug (resulting in a failure to cut off that solution).

It prints the final solution but it finds lower and upper bound equal. Can it be the reason why the callback is not called?

DeleteIf you put the print statement in the callback, and it prints the final solution, then the callback was called with that (proposed) incumbent.

DeleteDr. Rubin,

ReplyDeleteThank you for all the information on this blog, it is really helpful. I am working on a pretty sizeable stochastic MIP, using Benders decomposition as the main approach to solve it. I was hoping you could share your experience about some points concerning lazy cuts. Let me preface by saying that I have a minimization problem, there is no need for feasibility cuts within Benders, and that I am using Gurobi. I have a master problem that already contains some optimality cuts (from doing a couple of iterations of Benders with the LP relaxation of the master problem), and I am giving it to Gurobi’s B&B. Let’s call this problem BMP. This is a similar setup to what you described in one of your other posts (Benders decomposition then and now).

1. When a lazy constraint is added, the problem originally given to the B&B changes. It is not BMP anymore due to the constraint added. This means that the lower bound of the B&B, while still valid, might not be as tight as possible. I think I know the answer to this is negative, but do you have any indication that the LB of the B&B is recomputed at this point?

2. In every case that I ran so far, the output of the B&B procedure indicates that an incumbent for the B&B is not found until the very end of the procedure when the B&B terminates. On one hand, this makes sense because if a MIP solution for the master problem becomes the incumbent in the B&B, it also satisfies the optimality condition for Benders in that no more cuts are generated. On the other hand, I expected that at least in some of the cases I solved, I would obtain an incumbent solution (with an unfavorable cost) well before the B&B terminates. This would be the result of a lot of branching cuts. I wanted to ask you, first, if you think my logic is correct, and second, if you have ever experienced a similar result in your runs of Benders with callbacks.

Thank you for any comments you might have!

Goran

Goran,

DeleteI'm not a Gurobi user, and there are some fundamental differences between Gurobi and CPLEX. I'll answer in the context of CPLEX, and hopefully the answer will apply to Gurobi (but no guarantee).

On point 1: to the best of my knowledge, when a lazy constraint is added via callback in CPLEX, the node LP for the current node is updated. If the current node is providing the lower bound, then it will change immediately. Lazy constraints (and user cuts) can be designated as local (to the subtree rooted at the current node) or global. If you make the cut global, it will be added to all surviving nodes, but I don't know if their LPs will be resolved immediately. (I tend to doubt it, for efficiency reasons.) So the LB may not be recomputed until the solver decides to process the node where it currently occurs. In a pure best bound search, this will be immediate, but in a more typical search I don't know how quickly the bound will update.

On the second point, suboptimal incumbents typically do occur during Benders decomposition, so what you are seeing is a bit unusual (and possibly suspicious). There may be something specific in the structure of your model that causes the first feasible solution to be the winner. If you are seeding BMP with either a starting incumbent or a tight cutoff for the objective, it may be that the only solution better than your incumbent/cutoff is the optimum. That said, I'd probably take a close look at the code. :-)

Thank you for your helpful comments, Dr. Rubin!

DeleteHi,

ReplyDeleteI'm trying to use lazycallback for my benders decomposition and it seems I can't properly pass some of my variable values from my master problem to the lazycallback. Is there any small instances for BD with lazycallback I can use? (I do have the ilobendersatsp example, but it's a little bit complicated and different from what I have in my BD).

Thanks

Check out this post: http://orinanobworld.blogspot.com/2014/08/updated-benders-example.html.

DeleteThanks!

DeleteHi Prof. Rubin,

ReplyDeleteI loved the post, is a shame that such useful blogs are rare... I'm currently coding in C++ via Concert Technology both exact and heuristic algorithms to solve large scale MILP including routing variables, hence requiring (integer and fractional) cut callbacks.

I have tried to encapsulate my code to facilitate the inclusion of new formulations and algorithms. However, my question is: Do you think it would be convenient to make a class for all different callbacks (for DFJ, GFSEC, etc) or you think that just including them as methods on my solvers' class would be enough?

Hope you wouldn't find the question rather bizarre lol.

Best regards

Y

I don't find the question at all bizarre, although it's a bit hard for me to answer without seeing your code (and, trust me, I'm *not* asking to see your code -- bad enough I have to read my own ;-)). Also, my coding skills are, shall we say, not professional grade.

DeleteI think my inclination would be to create one class extending IloCplex::UserCutCallbackI (and another extending IloCplex::LazyConstraintCallbackI, if I were also using lazy constraints). Pass the constructor any information it needs from the model (environment, variables, ...). Either in the constructor or via an add() method (and perhaps a remove() method), let the user pass into the callback class as many cut generators as the user may wish. The cut generators would be methods that would access the model and its current solution and either cough up an instance of IloRange or return null if no cut was found.

Hope that makes sense.

Dear Professor Rubin, this blog is really amazing and helped me understand use of lazy constraints better. I am trying to solve VRP by adding capacity & sub-tour elimination constraints iteratively. As the number of nodes increases, the the number of cuts added is huge, slowing down the process. Is there anyway to remove some of the cuts while using lazyconstraint callback. - Thanks Raol

ReplyDeleteRaol,

DeleteI'm glad you find the blog useful. The add() method in a lazy constraint callback comes in two versions, one of which takes a second (integer) argument. That argument tells CPLEX whether you want the cut to be "purgeable". If you specify the value (UseCutPurge in the JavaAPI) that says yes, CPLEX will drop the constraint if at some point it no longer thinks the constraint is likely to be binding. Note that this raises the possibility the callback will have to recreate the same constraint at a later date.

Thanks a lot!

ReplyDeleteHi Professor Rubin,

ReplyDeleteI'm working on Bender's decomposition of a MILP on the Julia interface with JuMP package on a Gurobi solver. In the example here, the callback function of lazy constraints is called when the master problem reaches an integer feasible solution(of an iteration). However, the function should ideally be called only at an optimal solution of the iteration. Could you suggest the method to call the function at optimal solutions for each iteration of master problem model? Following is the description of the code:

#loading of the solver and JuMP

loading of master Problem

function addBendersCut(cb)

#subproblem loading and solving

#test for global optimum

#lazy constraints

end

addlazycallback(masterProblemModel,addBendersCut)

statusMP=solve(masterProblemModel)

Sorry, I"ve never used Gurobi, JuMP or Julia, so I have no idea. I don't even know which callbacks Gurobi provides. Your phrase "optimal solution of the iteration" confuses me a bit. If you mean that only want to invoke the callback for an integer-feasible solution that is an optimal solution to the node LP, I would expect that to be standard behavior for the solver. (That's what happens with CPLEX for most integer-feasible solutions, excepting those found by heuristics.) If you mean you only want the callback invoked when the master problem has been solved to what the solver thinks is optimality, you shouldn't be using callbacks at all. The approach there is solve (normally) - test solution - generate cut and add to master - solve again (until a winner is found).

DeleteThank you Prof. Rubin,

ReplyDeleteYour response helped me and my prof think deeper on it and realize the idea that we are solving only 1 Master problem and hence we can't solve it to optimality before any iteration(it would be optimal only at the last stage ). The unexplored branch and bound tree is saved when callbacks are called.

So, for a Minimization MILP,

how do we update the lowerbound obtained from the problem(upperbound is from dual sub problem):

by the solution values of the un-fathomed non-integer leaves of the solution tree(of master problem) which have the best bound when the callback is called?

For the "one-tree" (callback) approach, CPLEX maintains the correct lower bound. There is nothing for you to do in that regard.

DeleteThanks for this helpful post. You mentioned that heuristic solutions won't be checked over the pool of Lazy constraints since they are expected to be feasible. However, in Benders implementation, the cuts added using a heuristic solution can define a lower bound for artificial variables, and so the branch and bound. In other words, by a heuristic solution for integer MP (master problem), we might not have a solution for real variables of SP (subproblem). To give stronger lower bound in the root node, we may solve SP for the given heuristic solution, generate and add related cuts to MP. If it is not done by lazy call back, how can we add them to MP in the root node?

ReplyDeleteThanks.

Jess: First, just to be clear, let me emphasize that heuristic solutions generated by CPLEX _are_ checked against lazy constraints; it's only heuristic solutions generated by you in a heuristic callback that are not checked (at least, as of the last I heard -- this might be subject to change).

DeleteIf I understand the question correctly, this is what I would do. Since it's Benders, I assume you have a lazy constraint callback (LCC). If I generated a candidate solution in a heuristic callback (HC), before adding it (using setSolution in the Java API) I would first run it through the cut generator in the LCC. If the cut generator returned a feasibility cut, I would queue that somewhere in program memory; the next time the LCC was called, it would check the queue and add any cuts it found there (emptying the queue in the process). If the cut generator agreed that the integer part of the heuristic solution was okay but generated an optimality cut because the value of the surrogate real variable (the SP contribution to the overall objective) was too charitable, I would substitute the value of that variable that the cut generator came up with and inject that solution (but not queue the optimality cut). This applies at all nodes, including the root.

One thing people tend to forget is that there is nothing stopping various callbacks from communicating with each other through program global memory.

Hi Mr. Rubin. Thank you for this helpful blog, it is a pleasure to have experts share their knowledge for the broad audience. You refer to the legality of adding lazy constraint from a user cut callback. However, when I call cplex.addlazyconstraint(...), cplex crashes. Do you have any recommendation on how to add a lazy constraint from a user cut callback? Thanks!

ReplyDeleteCalling cplex.addLazyConstraint() (rather than just addLazyConstraint()) means that you are trying to modify the model itself. Modifying the model while a solve is in progress is illegal and likely causes the crash. Each callback class has it's own add methods, which add the cuts/lazy constraints to the solver without modifying the original model. So if you want to add a lazy constraint from a user cut callback (and you have a lazy constraint callback already attached), you can just use the add() or addLocal() method in the user cut callback. You do not need to signal that it is a lazy constraint rather than a user cut.

DeleteThanks so much for your sharing, it really helps me a lot.when I tried to use Java to call CPLEX and use callback functions to solve a VRP problem with branch-and-cut ,some problems emerged.I took out the subtour elimination constraint from the original problem, and used it as usercut and lazyconstraint .When I only used it as a lazyconstraint,the algorithm worked out very well, but when I used it as both usercut and lazyconstraint Java program couldn't stop, it kept calling usercutCallback at node0 and wouldn't make branch. I really don't know what the problem is.

ReplyDeleteDoes cplex loop calling usercutCallback at each non-integer node until no usercut are violated, and then make branch? If so, can I set the maximum number of calls at each node? Maybe just one time is ok.

Another problem is why it kept generating the same cut. I think when cut A has been already added,even if I generate A again in the next loop, it should have been satisfied so that no usercut is violated and the loop should be stopped.

At each node, CPLEX will call the user cut callback repeatedly until the callback returns without adding a cut. When it sees that no cut was added, it moves on. As far as I know, if the callback returns the same cut it added on the previous call, CPLEX will call it again. So the way to stop further calls at a node is to exit the callback without attempting to add anything.

DeleteI don't know of any way to force CPLEX to limit the number of times the callback is called at a given node. It should be possible to do that within your code. There's a method you can call to get the ID number of the node at which the call is occurring. So you can count the number of times a given ID number has shown up, and when it exceeds your limit you can return with no new cut and CPLEX will move on.

To your last point, I believe that CPLEX will recognize that a cut has repeated and not add a duplicate copy. I'm not sure why it doesn't stop in that case. I'm also surprised that your callback would keep generating the same cut. I think CPLEX updates the node solution between calls to the callback, so the next call should be with a node solution that satisfies the most recent cut. You might want to check your cut generating code.

Thank you so much.I think I find the problem due to your help.

DeleteHi,

ReplyDeleteIf I understand correctly, a lazy constraint call is made at arbitrary (integer) leafs of the branch-and-bound tree - whichever it happens to land on currently. My question is: is there a way to make this call only at the optimal leaf given the cuts added so far?

Thanks.

The calls occur at arbitrary nodes -- not necessarily leaf nodes (at least not leaves of the complete tree). The nodes where the calls occur have not yet been separated into children, so I suppose they are temporarily leaves. The key is that CPLEX thinks it has a possible new integer-feasible incumbent solution when it calls the callback.

DeleteTo apply the callback only at the "optimal" leaf, you have to go back to the way Jack Benders proposed his method. Solve the current model to optimality. Pass the optimal solution to the subproblem. (This would *not* be via callback.) If the subproblem coughs up one or more constraints, add them to the master model (as ordinary constraints, unless you are generating so many that you need to make them lazy to conserve memory). Then solve the modified master problem, starting from the root node, and repeat.

Thank you for the response. What you described (i.e., the Benders procedure) is what I have been doing, but then I saw the CPLEX example file ilobendersatsp.cpp which seems to be implementing 'Benders' via lazy calls, which confused me a little. In addition, a reviewer for a scientific journal suggested I implement Benders via lazy cuts so that I won't have to rebuild the Master B&B tree anew every iteration. The trouble with that approach I guess is that it doesn't use the optimal integer solution given the current model, so maybe what you save by maintaining a single B&B tree is lost due to using suboptimal integer points when deriving cuts. When I actually implemented both approaches for my current problem, 'classical' Benders (without lazy calls) seemed to work better. So my original question was motivated by finding a way to combine the advantages of both approaches. I'd be very grateful if you have any further thoughts on how to maybe do that.

DeleteThanks again!

I reviewed both the original approach and the callback approach in an even older post (https://orinanobworld.blogspot.com/2011/10/benders-decomposition-then-and-now.html). You're correct that there is no guarantee the callback method is faster than solve-cut-repeat, although my gut feeling is that it usually is. FWIW, I always use the callback method.

DeleteAs far as combining them, there are a couple of reasons why holding off until the optimal leaf node has been found won't work. The main one is that by the time you conclude node A is "optimal" (because the best bound converged to the value at that node), a different node (B) containing the true optimal solution will have been pruned, because the bound at B is worse than the bound at A. This is not an issue in the original Benders method, because you rebuild the tree after adding a cut, but it would be a problem (catastrophe?) if you were trying to reuse the current tree. It would require the solver to retain pruned nodes, which would eat a lot more memory than what is currently consumed. In any case, CPLEX will not do this.

I was not aware of the blog post you linked to, which addresses my issue pretty much directly.

ReplyDeleteI am very grateful for your time and for your responses.

You're welcome.

DeleteDear Prof. Rubin,

ReplyDeleteI am a beginner at both using Lazy constraints and benders decomposition. So please pardon me if my questions sounds quite simple.

May I ask you:

-What is global and local lazy/user cut constraints?

- When we add a lazy constraint at a node using add() does it count as a global lazy constraint and it will kept and called at the next nodes of the tree?

- you mention that in order to avoid keep calling the same cuts, we should exit the callback without attempting to add anything. May I ask you what is the command line in CPLEX for doing this?

-is there a way to print out the cut added as lazy or user cut at a node?

Best regards,

Hi Mahya,

ReplyDeleteIn what follows, anything relating to CPLEX functions will be answered in terms of the Java API, but something similar will apply to other APIs.

"What is global and local lazy/user cut constraints?" Global cuts are cuts that apply to the entire search tree. Local cuts only apply to the node at which they are added and its descendants (i.e., the subtree rooted at the node where they are added).

"When we add a lazy constraint at a node using add() does it count as a global lazy constraint and it will kept and called at the next nodes of the tree?" With legacy callbacks, add() adds a global constraint/cut and addLocal() adds a local constraint/cut. With generic callbacks, addUserCut() has a boolean argument to signal whether the user cut is local or global; separate methods (rejectCandidate() and rejectCandidateLocal()) are used to add global/local lazy constraints.

"You mention that in order to avoid keep calling the same cuts, we should exit the callback without attempting to add anything. May I ask you what is the command line in CPLEX for doing this?" There is no command. Your callback is executing code in a function. Just return from the function without adding anything. In most APIs, this can be done either by calling a return statement or just by reaching the end of the function code.

"Is there a way to print out the cut added as lazy or user cut at a node?" This is a bit language-specific, but yes. In Java, you are adding an instance of class IloRange. You can just pass it as an argument to a print statement. CPLEX automatically overrides the default toString() method to produce a human-readable string. To make the constraint more readable, be sure to assign meaningful name strings to your variables, either in the variable constructors or using the IloNumVar.setName() method (or its equivalent in your favorite API).

Dear Prof. Rubin,

ReplyDeleteI would like to first thank your for creating this blog and taking the time to answer the comments. I personally learn a lot going through your blog and also reading the comments.

I have read at one of your comments, that you mentioned you use only Lazy constraints, not user cut, when it comes to implement benders decomposition. May I ask you is there a particular reason?

My second question which is related to the first question is about using User cut within the the framework of benders decomposition. Within my code, I basically use exactly the same code for the user cut that I use for the lazy constraint. But, it seems that the same cut will be added infinitely at a node when the user cut is called. I mean sounds it is fallen at a loop. But, when I ignore the user cut and I only use the lazy constraint, the code works perfectly and it finds the optimal solution. I would like to know what could be the reason?

Best,

Alain

Alain,

ReplyDeleteI'm glad you find the blog useful. Regarding your first question, let me start by saying that I would use both callbacks with different cuts in each (Benders cuts in the lazy constraint callback, bound-tightening cuts in the user cut callback) if I had a recipe for generating bound tightening cuts. Typically, though, I do not know any special way to tighten bounds. CPLEX is good at generating the common types of cuts without my help.

I once did an experiment in which I added the Benders cuts in a lazy constraint callback, queued them within my code, and then added them again in a user cut callback. The reasoning was that the lazy constraints were not being used to tighten node bounds. I thought that by adding duplicates of them as user cuts, progress on the bound would be faster. It was not. That's just one experiment, so I can't rule out the value of doing that in some other case, but I'm not optimistic about it in general.

On to your second question. Any time you add a user cut, CPLEX will (hopefully) update the solution to the LP relaxation at that node and will then invoke the callback again at the same node, in case you want to add more cuts. This goes on until the user cut callback exits without adding a cut, which tells CPLEX it is time to move on. So you should check your code to make sure that it is working with the updated LP solution after the cut is added for the first time. Assuming it is, you might check whether the updated LP solution looks to CPLEX to be satisfying the new cut to within tolerances but looks to your code not to be satisfying the new cut (due to a difference in tolerances). For instance, if you test for something being zero or being nonnegative, you might need to modify the test to being within epsilon of zero or being greater or equal to -epsilon.

Dear Paul,

DeleteThanks for the comments. It is more clear to me now. I will try to test my code considering your comments.

Regarding my code and its error when I include user cut callbacks, as I said I put the benders, feasibility and optimality, cuts in the user cut callback. May I ask you in your opinions, is there any chance from theory point of view that putting benders cuts inside the user cut callback may cause this error? Or the code itself has some problem?

The reason to ask this is that when I look at the benders sample file of Cplex (Ilobendersatsp.cpp), I can't notice any difference in the way of coding to deal with user cut compared to the lazy constraints. The only difference is that at the beginning of the macro function of the user cut the following command line is added:

if ( !isAfterCutLoop() )

return;

The rest of the code is exactly the same when it comes to deal with the lay constraints.

Best regards,

Alain

Dear Prof. Rubin,

ReplyDeleteThis is an excellent blog that I follow for some time. (In fact, once I saw you at INFORMS AM passing by.)

I understand the difference between lazy constraints and user cuts.

However, in CPLEX 20.1, I got a little bit confused with the generic callbacks: generic callbacks: https://www.ibm.com/docs/en/icos/20.1.0?topic=callbacks-what-can-you-do-generic-callback.

The example xbendersatsp2.c uses CPXcallbackrejectcandidate to add the cuts. However, in the description of CPXcallbackrejectcandidate it mentions:

"There is no guarantee that CPLEX will use the constraints that you specify. CPLEX will try to do so, but for technical reasons, it is not always possible to use the constraints. You can thus not assume that in subsequent callback invocations the candidate solutions satisfy the constraints you specified here."

This statement goes against my idea of lazy constraints, which are used to accept/reject a new incumbent after begin added to the B&C.

Somehow, it seems that he constraints added through CPXcallbackrejectcandidate eliminate the current integer solution but may not be used after.

So, using CPXcallbackrejectcandidate we can end with a solution that does not respect the constraints added by that function?

What is the impact of this procedure in the Benders decomposition using one master problem?

On one hand, every time you get an integer solution you validate it with CPXcallbackrejectcandidate. On the other hand, you want the constraints added through that function to guide to feasible integer solutions.

Can you please comment on these issues?

Many thanks,

Ricardo Lima

First, thanks for the kind words. I am not at this year's INFORMS national meeting, but if you spot me at a future meeting, please feel free to say 'hi'.

ReplyDeleteAs to your question, this gets into some technical stuff that I'm not fully qualified to answer because (a) I don't know all the details of how solvers work (much as I don't know exactly how an internal combustion engine works, but still manage to drive my car) and (b) it involves some details that I'm pretty sure IBM considers trade secrets. That said, I think it is at least in part a question of how CPLEX handles preprocessing. The problem CPLEX is solving is different from the one you fed it. When the callback is invoked, CPLEX essentially translates the solution it found to fit your original model, and when you add new lazy constraints it tries to translate those to fit the model it is actually solving. Apparently the latter step is not guaranteed to succeed.

The good news is that, should it fail to enforce the lazy constraints and land on another candidate solution which violates them, the callback will again be invoked, and you will have the opportunity to "rediscover" those constraints. So there should be no danger of getting a final solution that is infeasible; the only danger is that the solution process may be inefficient due to unnecessary repetition.

I don't think I've ever checked whether a lazy constraint callback (legacy or generic) is forced to rediscover the same constraints, but my feeling is that it is at worst a rare occurrence. If for some reason you think it is happening frequently in one of your problems, and assuming the effort involved in generating the lazy constraints is nontrivial, a possible workaround is to store your lazy constraints in user memory. When the callback is invoked in the candidate context, you can start by evaluating all your stored lazy constraints to see if any are violated. If so, just reject the candidate. If not, go ahead and do the computations to see if the candidate is feasible or if a new violated lazy constraint can be found.