An interesting question recently popped up on Operations Research Stack Exchange. The setting is a graph $G=(V,E)$ in which path length is defined to be the number of edges on the path (i.e., all edges have weight 1). The problem is to select a subset $S\subset V$ of vertices with a specified cardinality $k$ so as to minimize the sum over all nodes of the distance from each node to the closest selected node. I will assume the graph is connected, since otherwise the objective might be undefined.

The author of the original question indicated in a comment that the context for the problem is something involving social networks, and in another comment indicated that the diameters of the graphs are relatively constant regardless of graph size. The diameter of $G$, which I will denote by $\delta(G),$ is the maximum distance between any pair of vertices in $V.$ I will denote by $D$ the set $\left\{ 0,1,\dots,\delta(G)\right\} .$

The author posed the following integer programming model, in which $x_{v,d}\in\left\{ 0,1\right\} $ is 1 if vertex $v$ has distance $d$ to the nearest selected vertex and 0 otherwise. Note that $x_{v,0}=1$ if and only if $v\in S.$ \begin{align*}

\min_{x} & \sum_{v\in V}\sum_{d\in D}d\cdot x_{v,d}\\

\text{s.t.} & \sum_{v\in V}x_{v,0}=k & (1)\\

& \sum_{d\in D}x_{v,d}=1\quad\forall v\in V & (2)\\

& x_{v,d}\le\sum_{u\in N_{d}(v)}x_{u,0}\quad\forall v\in V,\forall d\in D\backslash\left\{ 0\right\} & (3)

\end{align*}where $N_{d}(v)\subset V$ is the set of all nodes at (shortest) distance $d$ from $v.$ Constraint (1) ensures that $S$ has the correct cardinality, constraint (2) ensures that the distance of any node from $S$ is uniquely defined, and constraint (3) ensures that a node is at distance $d$ from $S$ only if at least one node at distance $d$ belongs to $S.$ I will refer to this as the "distance model".

The author was asking about a possible incremental approach, but I got curious about alternative models. Frequent forum contributor Rob Pratt suggested changing constraint (3) to $$x_{v,d}\le\sum_{u\in N_{1}(v)}x_{u,d-1}\quad\forall v\in V,\forall d\in D\backslash\left\{ 0\right\} \quad(3'),$$

which says that for a node $v$ to be at distance $d,$ one of its neighbors must be at distance $d-1.$ I will call that "distance model 2". Meanwhile, I thought it might help to leave $x_{v,0}$ binary but make $x_{v,d}\in\left[0,1\right]$ continuous for $d>0$ (which might or might not be equivalent to using branching priorities

to ensure that the $x_{v,0}$ variables were branched on before any

of the other variables). I will call that "distance model 3".

Someone else suggested what I will call the "assignment model", which uses one set of binary variables $x_{v}$ to determine which vertices are selected to be in $S$ and a second set if binary variables $y_{v,u}$ to indicate whether vertex $u$ is the closest vertex in $S$ to vertex $v.$ That model is as follows:\begin{align*}

\min_{x,y} & \sum_{u,v\in V}d_{v,u}y_{v,u}\\

\text{s.t.} & \sum_{v\in V}x_{v}=k & (4)\\

& \sum_{u\in V}y_{v,u}=1\quad\forall v\in V & (5)\\

& y_{v,u}\le x_{u}\quad\forall v,u\in V & (6)

\end{align*}where (4) enforces the size requirement for $S$, (5) stipulates that every vertex is assigned to a single selected vertex (possibly itself) and (6) makes sure that the assigned selected vertex is actually selected.

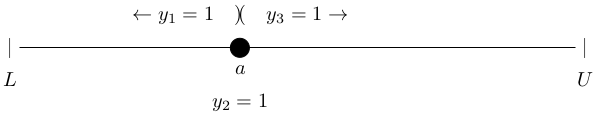

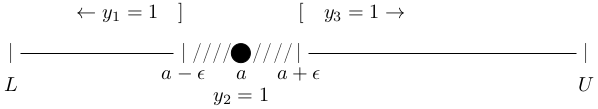

Lastly, I came up with yet another model, which I will call the "flow model" since it is based on treating selected vertices as sinks and vertices outside $S$ as sources in a flow model. It uses binary variables $x_{v}$ to indicate whether a vertex is selected and continuous variables $y_{u,v}\in\left[0,\vert V\vert-k\right]$ for flow volumes. Since the edges are bidirectional, for each edge $(u,v)\in E$ there will be two flow variables, $y_{u,v}$ and $y_{v,u}$ (only one of which will be nonzero in the optimal solution). The idea is that each vertex that is not selected passes along any flow arriving at it plus one unit of new flow. Selected vertices soak up whatever flow comes in. We minimize the sum of the aggregate flows across all edges, which effectively charges each unit of flow 1 for each edge it crosses. The optimal solution will therefore send each unit of flow to the selected vertex (sink) closest to its source, making the cost of each unit of flow equal to the distance from source to nearest selected vertex. That model is as follows.\begin{align*}

\min_{x,y} & \sum_{(u,v)\in E}\left(y_{u,v}+y_{v,u}\right)\\

\text{s.t.} & \sum_{v\in V}x_{v}=k & (7)\\

& \sum_{u\in N_{1}(v)}\left(y_{v,u}-y_{u,v}\right)\ge1-\left(\vert V\vert-k\right)\cdot x_{v}\quad\forall v\in V & (8).

\end{align*}The by now familiar constraint (7) sets the size of the selected set. Constraint (8) says that the flow out of any vertex $v$ must be one greater than the flow in \emph{unless} the vertex is selected (in which case nothing needs to flow out of it).

To assess how the various models perform, I coded them in Java using CPLEX 22.1 as the solver and ran a few experiments on randomly generated graphs. I did not do nearly enough experiments for any definitive conclusions, but I will quote the results of one that I think is somewhat informative. The test graph has $\vert V\vert=2,000,$ $\vert E\vert=23,360$ and diameter $\delta(G)=5.$ Each model was run for 10 minutes (not including the time spent constructing the model). The next table summarizes the results.

|

Distance |

Distance2 |

Distance3 |

Assignment |

Flow |

| Binary variables |

9,976 |

12,000 |

2,000 |

4,002,000? |

2,000 |

| Total columns |

9,976 |

12,000 |

9,976 |

4,002,000? |

48,720 |

| Total rows |

9,977 |

12,001 |

9,977 |

2,001? |

2,001 |

| Nonzero coefficients |

4,017,952 |

257,600 |

4,017,952 |

12,002,000? |

97,440 |

| Objective value |

3,202 |

3,192 |

3,181 |

none |

3,506 |

| Lower bound |

3,139.7 |

3,139.2 |

3,143.3 |

none |

1,980 |

None of the models reach proven optimality, and in fact the assignment model hit the time limit early in the presolve phase, before CPLEX printed any statistics about dimensions. All the output I got was that presolve "has eliminated 0 rows and 0 columns..." The dimensions I listed are the dimensions for the paper model, before any presolve reductions.

If you are wondering about why the "Distance2" model (which is the original distance model with Rob's modification to constraint (3)) has larger row and column dimensions than the original, it turns out the both start with the same initial dimensions but the CPLEX presolver removes a bunch of rows and columns in the original model that it cannot remove from Rob's version. Still, Rob's version winds up with an order of magnitude fewer nonzero coefficients than the original version (or my tweak to it, "Distance3").

Based on this and a few other preliminary runs, there are a handful of takeaways that I am somewhat comfortable in saying.

- As graph size grows, the assignment model fairly quickly becomes too large to use. Hypothetically it could reach proven optimum faster than the others given a liberal time limit, but the betting line is against that.

- Of the remaining models, my flow model has the most columns but the fewest rows and the fewest nonzeros. Unfortunately, it also has the worst performance. On a couple of other tests it did better, at least early on, with the incumbent solution value, but it seems to have the weakest bounds. In the interest of salvaging a little bit of my bruised pride, I will note that fewest nonzeros means that, as the graph grows, it might at some point be the only one of these models to fit in memory.

- The tweak I made to the original model ("Distance3") did best in both incumbent value and bound ... on this example ... within the 10 minute run time limit. There is no guarantee this holds up in other cases, and it ties with the original model for largest constraint matrix (excluding the assignment model).

- Rob's tweak has more rows and columns than the original model (or my tweak), but not hugely more, and it has the fewest nonzeros among the distance models. So it is definitely a contender for the production model, at least pending more testing.

Source code (in Java) that generates a random graph and tests the model is available from

my repository.